High Performance Computing Testbed

Motivation

Modern and fast hardware is the basis of applied research, especially in the field of AI/ML applications. The High Performance Computing Testbed (HPC) of the DAI-Labor offers both researchers as well as students the necessary resources to put their innovative ideas into practice. Through the HPC, they benefit from current powerful servers with the ability to efficiently process even large amounts of photo and video data.

Technology

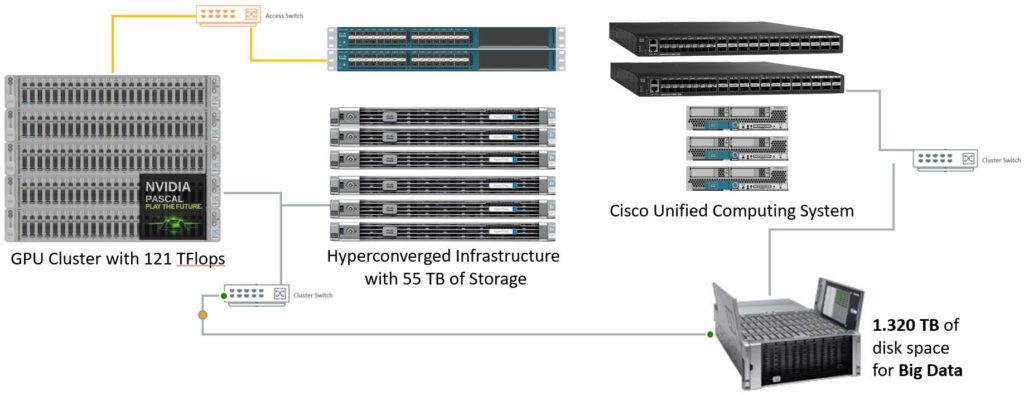

The HPC is a powerful tool for carrying out computationally intensive research work. The different components, which are coordinated with each other and with this scenario, allow to train even own complex models with machine learning. In the area of BigData, many data sets exceed the capacity of a single system. Therefore, HPC consists of many different components, which are interconnected via an internal network with high bandwidth as required. Several data storage devices can be used to store data, but still be available at any time via the high-speed network where they are needed.

The HPC cluster consists of CPU and GPU nodes, which are supplemented by a storage system. We rely on an HPC cluster with NVIDA Pascal chips, which are particularly suitable for high performance computing and deep learning thanks to their high computing power and efficiency. A total of 6 GPU nodes with 12 Nvidia P100 or V100 cards are available. Together there are over 46,000 GPU cores and theoretical 121 TFLOPS for single precision arithmetic available for calculations. In order to be able to store even the largest data, the researchers have storage systems with more than 1 petabyte of storage space at their disposal.